Contents Science Lab

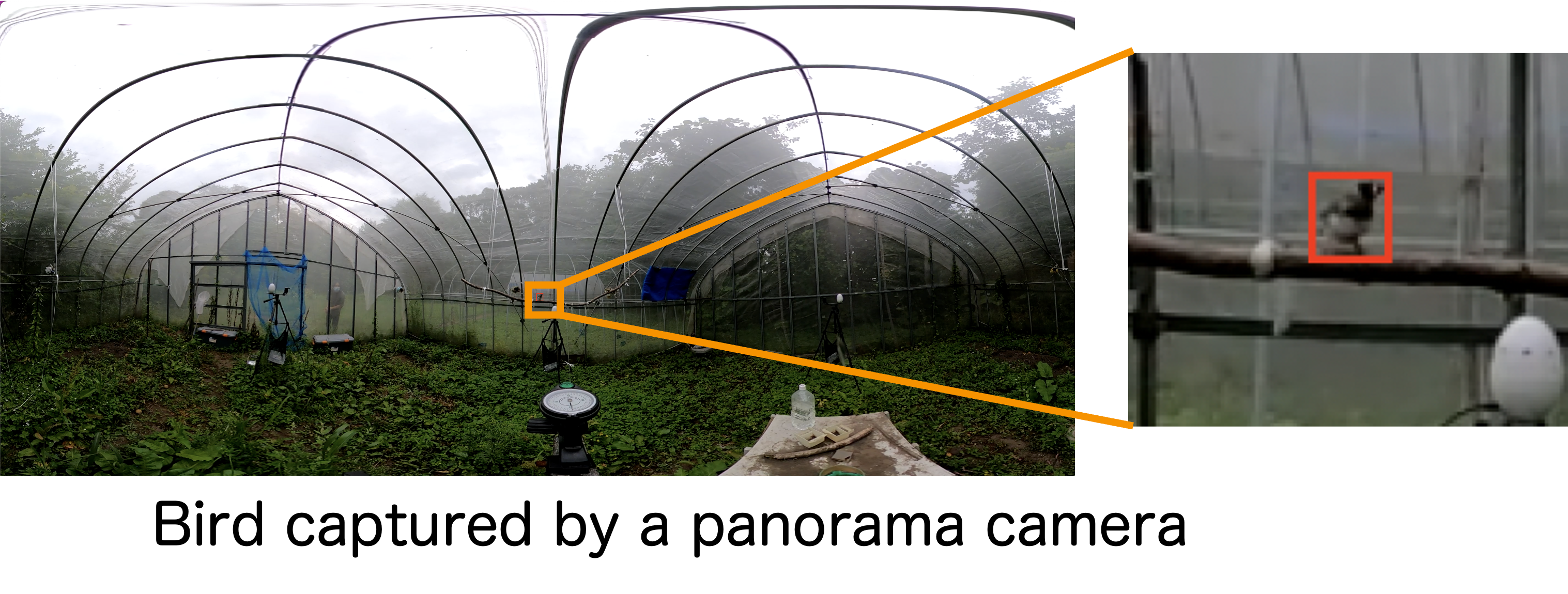

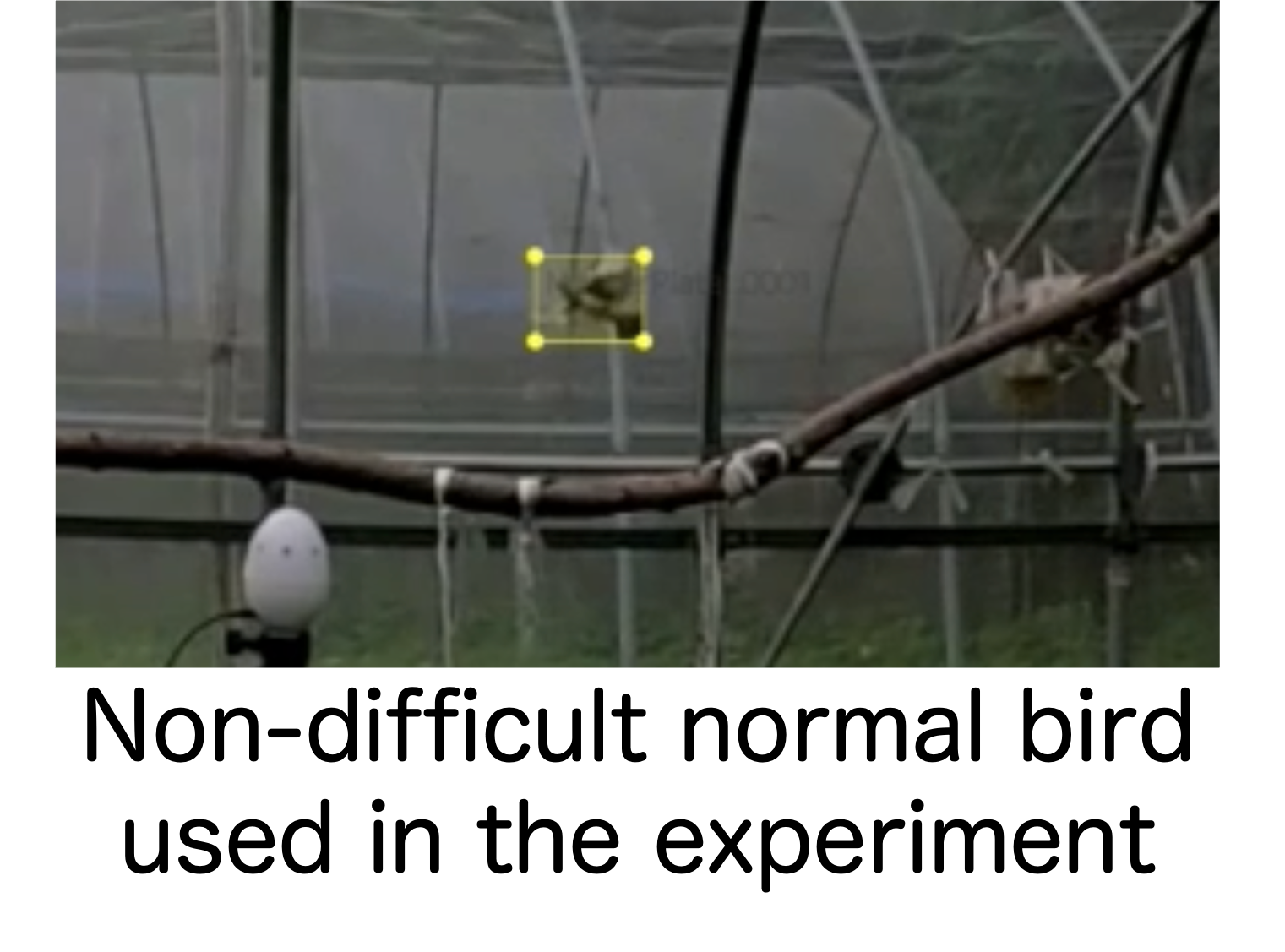

This dataset consists of multi-channel audio data and a panoramic image sequence, recorded for approximately 8 minutes in a closed 3D environment containing five Sunda zebra finches (Taeniopygia guttata) at a facility of Hokkaido University in Sapporo, Japan. A ground-truth bounding box surrounding each bird in the panoramic image sequence was manually annotated.

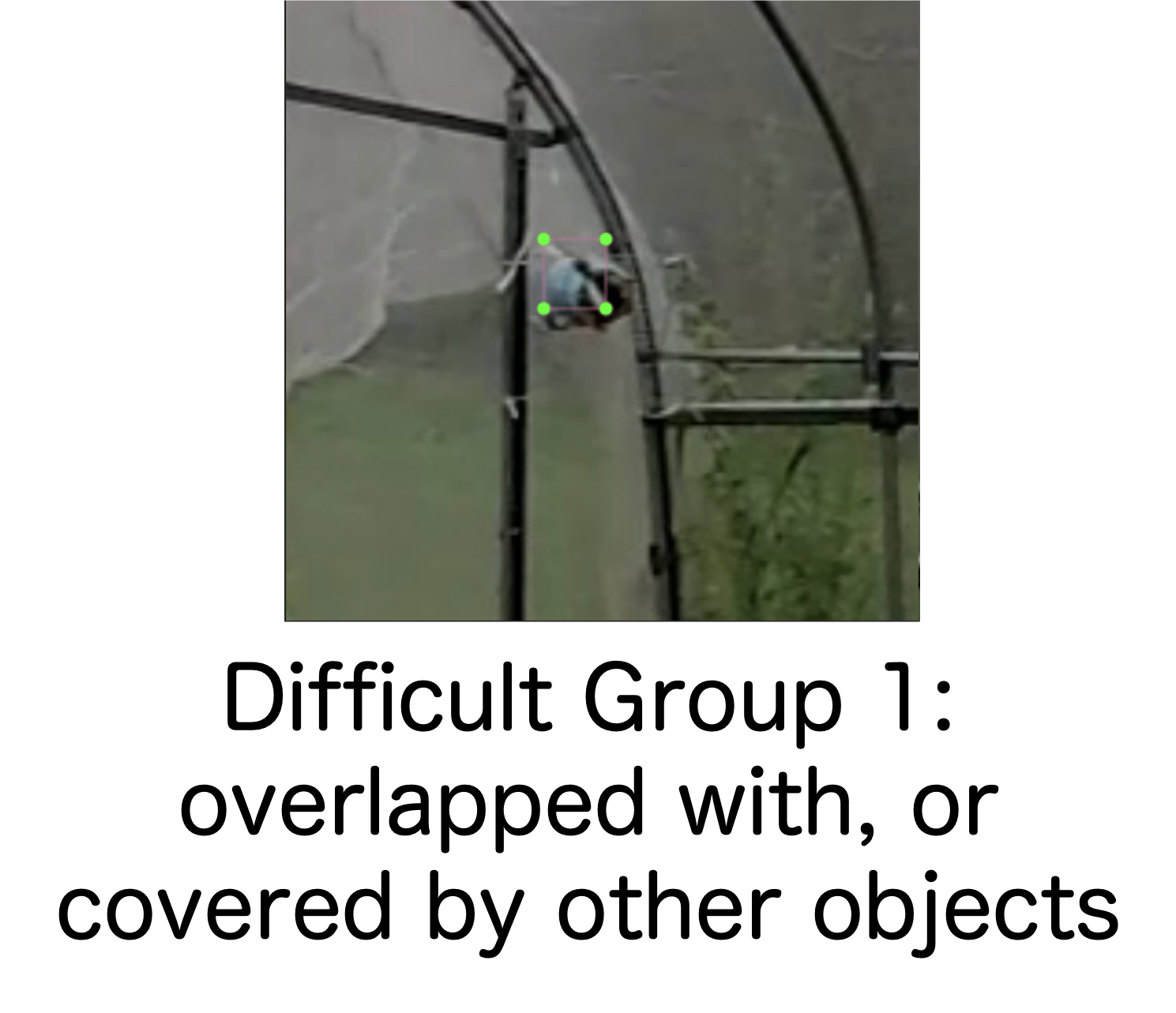

- annotation
  - output.json: Annotation JSON file.
  - txt_anno: Converted text annotation files. Each file name corresponds to the frame number. Each file is composed of lines: x1, y1, x2, y2, difficult, occlusion, truncated.
- audio
  - gopro_sample.wav: Audio data recorded by GoPro.
  - GS010046.wav: Multi-channel audio data recorded by TAMAGO-03.
- frame
  - video
    - GS010046.360: Raw video recorded by GoPro.
    - GS010046.mp4: Converted mp4 video using GoPro tool.
  - Frame images.
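The per-frame text annotation format described above (lines of x1, y1, x2, y2, difficult, occlusion, truncated in the txt_anno files) could be read with a small parser like the following. This is only a sketch under assumptions: the field separator (commas and/or whitespace), the encoding of the three tags as 0/1 values, and the names `BirdBox`, `parse_annotation_line`, and `parse_annotation_text` are all hypothetical and not part of the dataset itself.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class BirdBox:
    """One annotated bounding box (hypothetical container, not from the dataset)."""
    x1: float
    y1: float
    x2: float
    y2: float
    difficult: bool
    occlusion: bool
    truncated: bool

    @property
    def is_normal(self) -> bool:
        # "Normal" here means that no difficulty tag is set.
        return not (self.difficult or self.occlusion or self.truncated)


def parse_annotation_line(line: str) -> BirdBox:
    """Parse one line: x1, y1, x2, y2, difficult, occlusion, truncated.

    Assumption: fields are separated by commas and/or whitespace,
    and the three tags are stored as 0 or 1.
    """
    fields = [f for f in re.split(r"[,\s]+", line.strip()) if f]
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}: {line!r}")
    x1, y1, x2, y2 = (float(v) for v in fields[:4])
    difficult, occlusion, truncated = (int(float(v)) != 0 for v in fields[4:])
    return BirdBox(x1, y1, x2, y2, difficult, occlusion, truncated)


def parse_annotation_text(text: str) -> List[BirdBox]:
    """Parse the full contents of one annotation file, skipping blank lines."""
    return [parse_annotation_line(l) for l in text.splitlines() if l.strip()]
```

To use it on a real file, read the file contents as text (for example with `pathlib.Path(...).read_text()`) and pass the string to `parse_annotation_text`; the exact file names inside txt_anno are not specified here beyond corresponding to the frame number.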

difficult, occlusion, truncated, and normal (no difficulty tag), respectively.

@article{Sumitani:Birds2021,
  author = {Sumitani, Shinji and Suzuki, Reiji and Arita, Takaya and Nakadai, Kazuhiro and Okuno, Hiroshi},
  title = {Non-invasive monitoring of the spatio temporal dynamics of vocalizations among songbirds in a semi free flight environment using robot audition techniques},
  journal = {Birds},
  volume = {2},
  number = {2},
  pages = {158--172},
  month = {April},
  year = {2021}
}

@inproceedings{Kawanishi:AVSS2022,
  author = {Kawanishi, Yasutomo and Ide, Ichiro and Chu, Baidong and Matsuhira, Chihaya and Kastner, Marc A. and Komamizu, Takahiro and Deguchi, Daisuke},
  title = {Detection of birds in a 3D environment referring to audio-visual information},
  booktitle = {Proc. 18th IEEE Int. Conf. on Advanced Video and Signal-based Surveillance (AVSS2022)},
  pages = {TBA},
  month = {December},
  year = {2022},
  address = {Madrid, Spain / Online}
}

This dataset was prepared as part of JSPS/MEXT Grants-in-Aid for Scientific Research JP21K12058, JP20J13695, JP20H00475, JP19KK0260, JP18K11467, and JP17H06383 in #4903 (Evolinguistics).

The audio and video data were recorded in cooperation with Professor Kazuhiro WADA at the Graduate School of Science, Hokkaido University. Animal experiments were conducted under the guidelines and with the approval of the Committee on Animal Experiments of Hokkaido University. These guidelines are based on the national regulations for animal welfare in Japan (Law for the Humane Treatment and Management of Animals with partial amendment No.105, 2011).

faculty+ [at] cs [dot] is [dot] i [dot] nagoya-u [dot] ac [dot] jp