Contents Science Lab

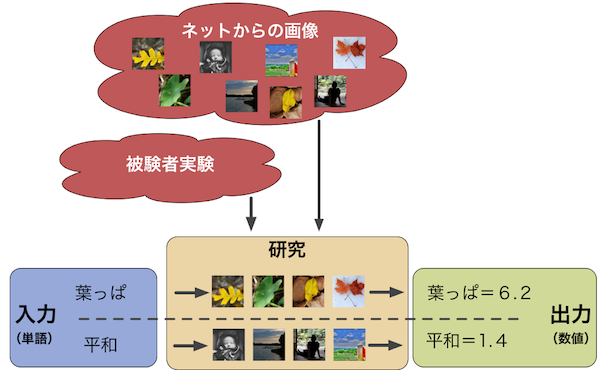

In these projects, we investigate the semantic gap as it relates to human perception of concepts, and apply these ideas to multimedia applications.

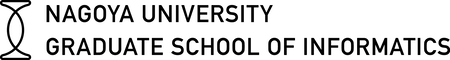

In this research, we colorize book pages according to human perception in order to summarize and compare their contents.

Main project members: Chihaya Matsuhira, Dr. Marc A. Kastner

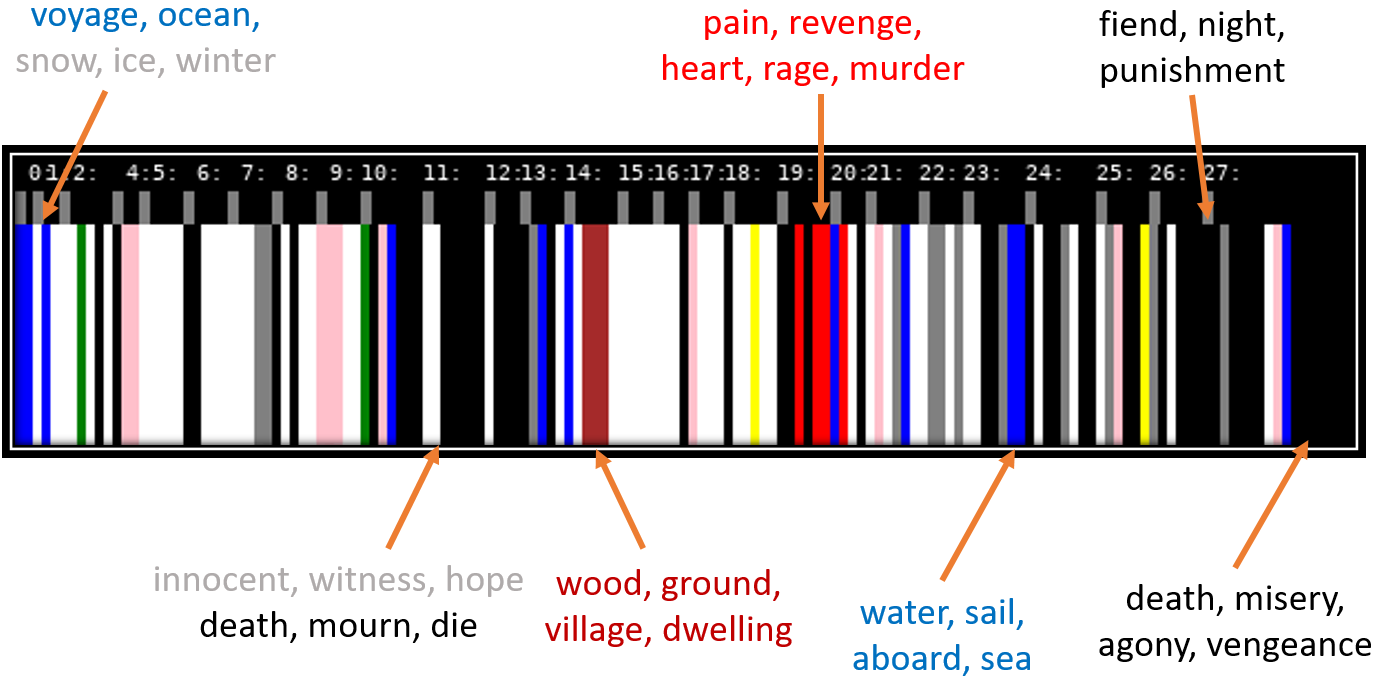

In this research, we incorporate psycholinguistics into image captioning in order to tailor captions to different applications.

Main project members: Kazuki Umemura, Dr. Marc A. Kastner

In this research, we model the human perception of the semantic gap for use in affective computing.

Main project members: Chihaya Matsuhira, Dr. Marc A. Kastner

In these projects, we analyze the relationships between visual data and phonetics.

In this research, we analyze the phonetic characteristics and human perception of mimetic words used in Japanese to describe human gait.

Main project members: Dr. Hirotaka Kato

In these projects, we study food images, videos, and recipes.

In this research, we analyze the attractiveness of food images through image analysis, incorporating gaze information to improve the results.

Main project members: Akinori Sato, Kazuma Takahashi, Dr. Keisuke Doman

In this research, we analyze the typicality of food images within a food category.

Main project members: Masamu Nakamura, Dr. Keisuke Doman

In these projects, we use geographic and location data from social media content for multimedia applications.

In this research, we analyze methods for detecting similar geo-regions based on visual concepts in social media photos.

Main project members: Hiroki Takimoto, Lu Chen

In these projects, we target video and document summarization using additional knowledge from multimedia corpora.

In this research, we develop a method for automatically authoring video biographies from Web content.

Main project members: Kyoka Kunishiro