Contents Science Lab

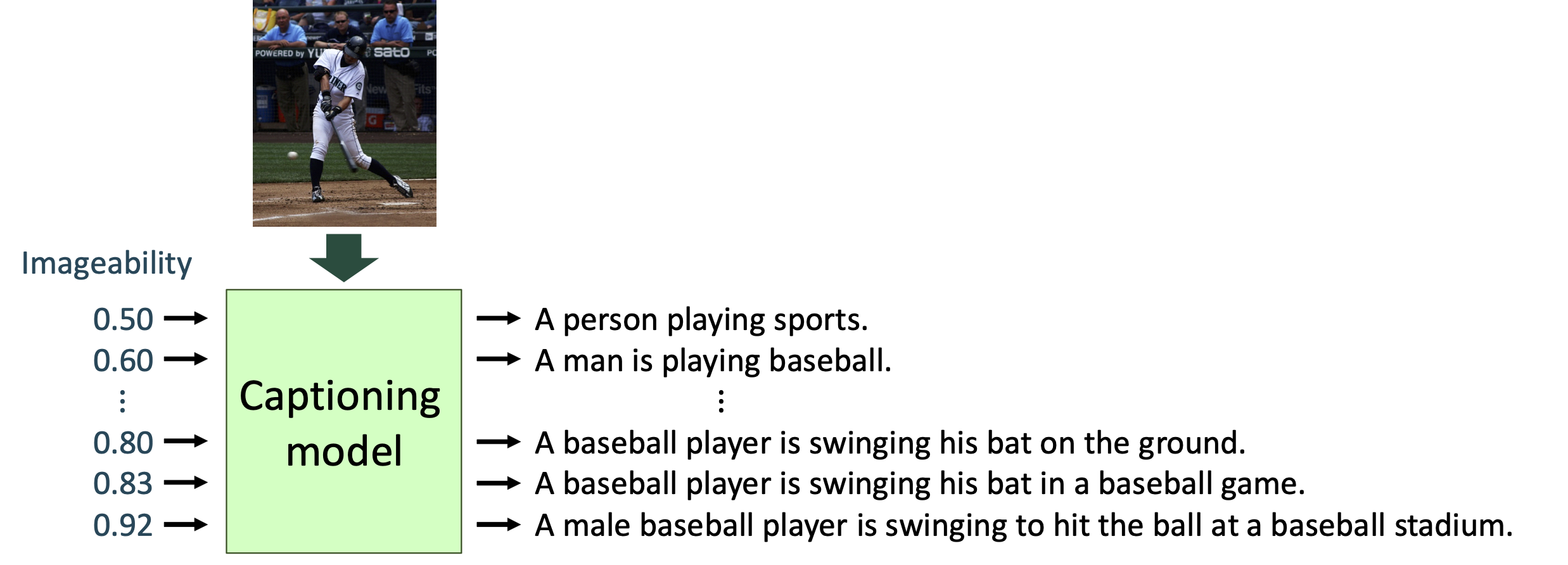

In this research, we aim to generate image captions tailored to how captions are actually used. To achieve this, we incorporate a psycholinguistic measure into caption generation.

For example, to help visually impaired people understand an image, captions that describe its content in as much detail as possible are preferred. For images in news articles, on the other hand, captions that convey the content of the article are preferred over a plain description of what the image shows.

Image captions are used in many real-world situations, and the desired properties differ from one application to another. To generate captions suited to each of them, Ide Laboratory is working on image captioning that can freely adjust how much detail a caption gives about the image content.

To this end, we use “imageability”, a psycholinguistic measure of how easily the content of a word can be pictured mentally. Incorporating it into caption generation makes it possible to tailor a caption to an intended degree of visualness. With the resulting model, if you input an image and specify a low value, a concise caption is generated; if you specify a high value, a caption that describes the image content in detail is generated.
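As a rough illustration of what "specifying a value" could mean, the sketch below scores a caption's visualness as the mean imageability of the words it contains that appear in a rating lexicon, then picks, from a few candidate captions, the one closest to a requested value. This is a simplified stand-in, not Ide Laboratory's model: the approach described above builds imageability into the caption generator itself, whereas this sketch only reranks fixed candidates. The lexicon values, the candidate captions, and the names `caption_imageability` and `pick_caption` are hypothetical placeholders.

```python
from __future__ import annotations

# Hypothetical word imageability ratings (placeholder values, not real norms).
# Higher means the word is easier to picture mentally.
IMAGEABILITY = {
    "dog": 630.0, "ball": 600.0, "grass": 610.0, "red": 560.0,
    "animal": 580.0, "play": 430.0, "outdoors": 450.0, "thing": 300.0,
}

# Hypothetical candidate captions for one image, from vague to detailed.
CANDIDATES = [
    "A thing outdoors.",
    "An animal at play outdoors.",
    "A dog chasing a red ball on the grass.",
]


def caption_imageability(caption: str, lexicon: dict[str, float]) -> float | None:
    """Mean rating of the caption's words found in the lexicon (None if none are rated)."""
    words = [w.strip(".,").lower() for w in caption.split()]
    scores = [lexicon[w] for w in words if w in lexicon]
    return sum(scores) / len(scores) if scores else None


def pick_caption(candidates: list[str], target: float, lexicon: dict[str, float]) -> str:
    """Return the candidate whose imageability score is closest to the target value."""
    scored = [(c, caption_imageability(c, lexicon)) for c in candidates]
    scored = [(c, s) for c, s in scored if s is not None]
    if not scored:
        raise ValueError("no candidate contains a rated word")
    return min(scored, key=lambda cs: abs(cs[1] - target))[0]


if __name__ == "__main__":
    for target in (380, 650):
        print(target, "->", pick_caption(CANDIDATES, target, IMAGEABILITY))
```

Here a low target (380) selects the vague "A thing outdoors." and a high target (650) selects the detailed "A dog chasing a red ball on the grass.", mirroring the low-value/high-value behavior described above. Averaging only over rated words is a deliberate simplification; unrated function words such as "a" and "the" are ignored rather than counted as zero.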

Completed the Master's program in AY2020

Cooperative Research Fellow (Hiroshima City University)